D.A.G.G.E.R. Versus Filecoin

Introduction

People often refer to SHDW as the 'Filecoin of Solana,' and we consider that a compliment. Being likened to such an impactful project resonates with our commitment to decentralized storage and our shared vision of blazing new trails. However, it's essential to acknowledge that there's much more under the hood of D.A.G.G.E.R. and shdwDrive than this simple comparison to Filecoin suggests. It's time; let's dive into another installment of the D.A.G.G.E.R. Versus series!

Friends, technophiles, and blockchain enthusiasts, welcome back to the decentralized storage arena! Today's face-off introduces a well-known challenger to our proprietary D.A.G.G.E.R. protocol. It's a rumble between the daring disruptor, GenesysGo's Directed Acyclic Gossip Graph Enabling Replication Protocol (D.A.G.G.E.R.), and the legacy heavyweight, Filecoin. If comparisons are the sincerest form of flattery, then strap in as we dissect the likening of D.A.G.G.E.R. and shdwDrive to Filecoin.

In this tech tussle, we’ll illustrate not just the shared vision of both contenders to revolutionize cloud storage but also the stark contrasts that set them leagues apart. We’ll stay focused on design features that aid our discussion on consensus technology and design philosophy. Tokenomics of $FIL versus $SHDW, reward structures, and cost models are reserved for a future series more centered around shdwDrive rather than D.A.G.G.E.R.

Ready your ringside seats and prepare as we break down design choices influencing user experience, scrutinize the effect of underlying consensus mechanisms on performance, compare marketplaces to on-demand cloud, and spotlight why being part of the Solana ecosystem means scaling applications at enterprise speeds. From Filecoin’s reliance on off-chain retrieval markets to the quirky suggestion that users should physically ship hard drives for larger data hauls—expect some surprises in this showdown.

Overview of Designs

ShdwDrive/D.A.G.G.E.R. (by GenesysGo) and Filecoin/IPFS (by Protocol Labs) were both conceived with an earnest desire to democratize cloud revenues. They share a vision of tapping the potential of idle resources scattered among regular users who want to contribute to a decentralized storage network. Despite their congruent aspirations, the design choices made by each protocol are tellingly divergent, shaping distinct user experiences and affecting performance facets such as speed, latency, and scalability.

Protocol Labs Framework:

- IPFS (InterPlanetary File System): Functions as the data distribution layer, utilizing a distributed network to locate and deliver files based on unique content identifiers.

- Filecoin: Acts as the blockchain-enabled payment layer, providing an incentivization and transaction system for storage and retrieval services on top of IPFS.

- IPC (InterPlanetary Consensus): Serves as a data scalability solution for the Filecoin Virtual Machine (FVM) and Filecoin Ethereum Virtual Machine (FEVM), implementing the Tendermint Core for execution of “scalable recursive subnets.”

GenesysGo Framework:

- D.A.G.G.E.R.: Powers shdwDrive as both the data distribution mechanism and consensus engine, optimized for rapid data access and file processing.

- shdwDrive: Offers decentralized storage services, leveraging the speed and efficiency of D.A.G.G.E.R. for a CDN-like, on-demand experience.

- Solana Blockchain and Virtual Machine (SVM) Integration: Provides a scalable environment for high-speed computation and payment for shdwDrive users, eliminating the need for additional recursive subnet layers, rollups, and other complications to scale smart contract execution.

Smart Contract Integrations:

GenesysGo has chosen to integrate with the Solana Virtual Machine, underscoring a commitment to performance and streamlined user experience, opening opportunities for organic growth and scaling directly in sync with the flourishing Solana ecosystem. Extending this feature, shdwDrive/D.A.G.G.E.R. may further benefit from its compatibility with any virtual machine or payment layer if necessary (including USD). This flexibility allows for potential expansion and the creation of cross-chain solutions that align closely with developers’ needs and grow adoption. Combined, you have a fully data-scalable developer environment by using two elegant and fast consensus mechanisms: D.A.G.G.E.R. and Solana.

In contrast, Filecoin opted for its own Filecoin Virtual Machine (FVM) and the Ethereum-compatible FEVM, which together have fostered critical storage support for many of the legacy chains over the years. Filecoin’s widespread adoption and pioneer status have led it to recognize certain scaling bottlenecks in data-centric applications built using FVM. Its planned improvement to the virtual machine layers is IPC, which uses the Tendermint consensus engine to spawn multiple recursive subnets to help scale. As we all learned from the previous D.A.G.G.E.R. versus Tendermint article, this choice has its own set of challenges, which we have discussed in detail here.

Consensus and Operations

Filecoin leverages dual consensus mechanisms, encompassing Proof of Replication (PoRep) and Proof of Spacetime (PoSt), executed within the framework of Expected Consensus (EC), all of which align with the principle of 'useful work' as per the Filecoin whitepaper. PoRep ensures miners create and maintain unique, verifiable replicas of data, while PoSt verifies that miners continuously store this data over time.

Expected Consensus (EC) is engineered to harness the power of probabilistic selection and parallelism in the leadership process. At the heart of this method lie Power Fault Tolerance and mining power, which democratize block leadership by linking a miner's influence directly to their storage contributions, a publicly verifiable metric on the blockchain. Miners engage in a continuous process of proving their storage legitimacy through Proof-of-Spacetime (PoSt), a mechanism that enforces honesty and accountability. Leadership election within this framework is independent and non-synchronous; each miner, using a Verifiable Random Function (VRF) that incorporates unique input data, autonomously determines whether they are the leader for a particular epoch without the need for active, coordinated agreement. This allows multiple leaders to emerge simultaneously within an epoch (all leaders within an epoch forming a tipset), imbuing the blockchain with robustness and reducing the chances of any single leader's failure affecting the entire network’s consensus.
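To make the self-election idea concrete, here is a minimal sketch of how a miner might privately check whether it won an epoch. It is an illustration under loud assumptions, not Filecoin's implementation: `vrf_stand_in` uses a plain SHA-256 hash where a real VRF would be used, the win threshold is a simple linear function of power share, and `expected_leaders`, the key derivation from the miner name, and every function name are hypothetical.

```python
import hashlib

def vrf_stand_in(secret_key: bytes, epoch: int, randomness: bytes) -> float:
    """Hash-based stand-in for a VRF output, mapped to [0, 1). Not a real VRF."""
    digest = hashlib.sha256(secret_key + epoch.to_bytes(8, "big") + randomness).digest()
    return int.from_bytes(digest, "big") / 2**256

def is_leader(secret_key, epoch, randomness, miner_power, total_power,
              expected_leaders=5.0):
    """Each miner runs this locally; no coordination with other miners is needed."""
    # Assumption: win probability grows linearly with the miner's share of power.
    threshold = min(1.0, expected_leaders * miner_power / total_power)
    return vrf_stand_in(secret_key, epoch, randomness) < threshold

def tipset_leaders(miners, epoch, randomness, expected_leaders=5.0):
    """Collect every miner that independently elected itself for this epoch."""
    total_power = sum(miners.values())
    return [
        name for name, power in miners.items()
        # Assumption: the secret key is derived from the name purely for the demo.
        if is_leader(name.encode(), epoch, randomness, power, total_power,
                     expected_leaders)
    ]
```

Because each check depends only on the miner's own key, the epoch, and shared randomness, zero, one, or several miners can win the same epoch, which is what gives rise to a tipset.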

Despite the distributed concurrency afforded by EC, Filecoin's holistic protocol operation reveals several critical intervals that necessitate coordinated actions. These moments introduce latency and define its runtime as operationally synchronous. For instance, the Windowed Proof-of-Spacetime requires miners to submit proofs within prescribed deadlines, enforcing a synchronized commitment across the network. Block propagation relies on the complex dynamics of GossipSub, which requires nodes to actively share and manage state information, implicitly coordinating to maintain block propagation continuity. The Tipset architecture involves inter-block coordination to choose the canonical chain during forks. The storage market's functionality exhibits additional synchronization: discovery and negotiation phases depend on clients and miners engaging in synchronous interactions to finalize deal terms before committing to the “Storage Market Actor,” and subsequent data transfers are meticulously recorded on-chain. Fault management within the mining subsystem also necessitates aligning and executing corrective actions, signifying deliberate coordination among nodes to ensure sectors' validity. These necessary moments punctuate Filecoin's sequence of operations, establishing a rhythm of interactions that still relies on crucial rounds of synchronization, deviating from an entirely asynchronous operational paradigm.

This approach by Filecoin underscores a different operational paradigm from a novel, completely asynchronous, leaderless runtime, such as D.A.G.G.E.R., that focuses on raw data ingestion (throughput) and reducing latency.

Throughput:

Filecoin's operation involves a structured system that partially relies on moments of coordination. The Expected Consensus protocol in Filecoin probabilistically selects leaders to validate and bundle transactions into blocks, which are grouped into tipsets; however, block propagation via gossip and marketplace activities are examples of steps that slow down the overall operational throughput of the system.

D.A.G.G.E.R., with its fully asynchronous and leaderless model, forgoes the concept of leader election entirely, thus enabling immediate transaction processing across a graph-based consensus topology and forgoing the need for block finalization, block height alignment, or inter-block tipset coordination. Furthermore, the consensus runtime completely decouples transaction ordering from order execution, offering increased independence of modules. Wield node selection is automated and distributed based on node capacity, geography, randomization algorithms, and “standing,” removing the reliance on marketplace order matching engines and deal-making. As a result, such systems demonstrate enhanced efficiency and scalability, especially for processing high volumes of data swiftly.
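As a rough illustration of automated node selection without a deal-making marketplace, the sketch below picks storage nodes by capacity, geography, randomization, and standing. It is not D.A.G.G.E.R.'s actual selection algorithm: the `Node` fields, the 0.5 penalty for reusing a region, and the weighting formula are all assumptions made for the example.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class Node:
    name: str
    free_bytes: int   # remaining capacity
    region: str       # coarse geography label
    standing: float   # hypothetical reputation score in [0, 1]

def select_nodes(nodes, shard_count, shard_size, seed):
    """Pick up to shard_count distinct nodes, weighted by standing and region spread."""
    rng = random.Random(seed)  # seeded so every caller derives the same choice
    eligible = [n for n in nodes if n.free_bytes >= shard_size]
    chosen, used_regions = [], set()
    while eligible and len(chosen) < shard_count:
        # Assumption: halve the weight of nodes in regions that were already used.
        weights = [
            n.standing * (0.5 if n.region in used_regions else 1.0) + 1e-9
            for n in eligible
        ]
        pick = rng.choices(eligible, weights=weights, k=1)[0]
        chosen.append(pick)
        used_regions.add(pick.region)
        eligible.remove(pick)
    return chosen
```

The point of the sketch is that placement is a deterministic function of public inputs and a seed, so no order book or negotiation round is needed before data can be written.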

Latency:

Latency, which is the time taken to achieve consensus and validate transactions within a network, is a critical factor influencing blockchain performance. The asynchronous, leaderless system employed by D.A.G.G.E.R. is crafted to minimize this latency, with nodes immediately processing transactions and independently appending them to the ledger. Filecoin's protocol navigates the latency landscape through its distinctive Expected Consensus (EC) mechanism. While it does introduce latency, particularly during leader election and tipset formation, it is not predicated on synchronized uniformity at each block height. Instead, latency emerges as a byproduct of the required time for elected leaders to broadcast and other network participants to endorse new blocks — a vital process to the assembly of a tipset. This series of actions does incur some processing delays when compared to D.A.G.G.E.R.'s framework. However, Filecoin's design also facilitates an ongoing evolution of the blockchain, ensuring that transactions are incorporated at regular intervals. The system is designed to alleviate the need for unanimous, real-time affirmation of each block by the entire network, thus preserving functionality and ensuring consistent progress, albeit with a moderate increase in latency compared to protocols like D.A.G.G.E.R.

In contrast, D.A.G.G.E.R. eliminates the need for these moments of coordination. Instead, it utilizes a gossiping Directed Acyclic Graph (DAG) structure, where information spreads rapidly from one node to another, like rumors in a crowd, without the need for coordinated rounds. As nodes receive new transaction data, they do not wait for a collective vote to agree on what is true. Each node independently verifies transactions and appends them to the ledger following the same set of rules — this process can be likened to 'implicit voting.' This immediate and ongoing verification across the network means that consensus on the ledger's state is a continuous and automatic process rather than a scheduled event. It's like having a group of accountants who work independently but follow the same accounting principles to maintain consistent books rather than holding a meeting every time they need to add a new entry.

Stated differently, as truth disseminates through the D.A.G.G.E.R. network via a gossiping DAG structure, the consensus on the state of truth is not an orchestrated event (explicit voting); instead, it is inherently understood and accepted (implicit voting), arising from the universal algorithmic substrate that not only binds all nodes but also enables them to independently discern and affirm the veracity of the shared ledger without explicit reconciliation of individual perspectives.
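The accountant analogy can be sketched in a few lines. In this toy simulation, nodes gossip with random peers until everyone has heard every transaction, and each node then derives its ledger locally using the same rule. It is only a model of the idea: the sorted-order rule in `local_ledger`, the pairwise exchange, and all names are assumptions, and the global `everything` set exists solely so the simulation knows when to stop.

```python
import random

def gossip_until_converged(initial, seed=0, max_rounds=1000):
    """initial maps node name -> set of transactions that node has heard of."""
    rng = random.Random(seed)
    known = {node: set(events) for node, events in initial.items()}
    names = sorted(known)
    everything = set().union(*known.values())  # simulation-only stopping condition
    rounds = 0
    while any(known[n] != everything for n in names) and rounds < max_rounds:
        for n in names:
            peer = rng.choice([p for p in names if p != n])
            merged = known[n] | known[peer]   # both sides learn what the other knows
            known[n] = known[peer] = merged
        rounds += 1
    return known, rounds

def local_ledger(events):
    """Every node applies the same deterministic rule, so no vote is exchanged."""
    return sorted(events)
```

Once two nodes hold the same set of events, `local_ledger` gives them identical books without either one asking the other to confirm the result.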

Not only does D.A.G.G.E.R. adopt an asynchronous consensus mechanism, but it also achieves operational modularity that functions holistically outside of a consensus context. Each node's Controller Module works independently to read from and write to the ledger, akin to file uploading in a storage system. This modularity means transactions can be ordered and executed without inducing coordination latency, a paradigm shift from traditional systems where coordinated consensus is central to decision-making. Moreover, the audit and repair process, a separate yet vital part of the D.A.G.G.E.R. operation, showcases its autonomous capabilities, further distinguishing the operational routine from the consensus layer. Randomized audit processes and algorithmically driven repairs, triggered by data inconsistencies or node failures, proceed unilaterally, without waiting for agreement from the rest of the network. This self-healing approach ensures network integrity and availability through efficient and decentralized automatic interventions, underpinning D.A.G.G.E.R. and Shadow Drive's inherently asynchronous operational runtime, a true departure from legacy blockchain systems.

This unique property of D.A.G.G.E.R. is due to its clever application of graph structure (DAG) to the live consensus runtime. This is an edge in efficiency when compared to Filecoin’s runtime, and it is increasingly being validated by the data we are collecting from Testnet Phase 1 (and presented herein). If you're recognizing the potential of DAGs in distributed systems, great! These structures are exceptionally adaptable, opening doors to innovative algorithmic strategies and fresh solutions in the world of cryptography. Interestingly, Filecoin uses DAGs as well!

Directed Acyclic Graphs (DAGs)

D.A.G.G.E.R.'s ingenuity lies in its leaderless and asynchronous architectural purity, which is enabled by a DAG-based graph representation of gossip and consensus. It enables the network to reach consensus without synchronized clocks or an over-reliance on message broadcasting. This clever use of an implicit voting system, leveraging the natural information flow within the graph-based structure, addresses the same security concerns that Filecoin's double-layered approach aims to solve, but D.A.G.G.E.R. accomplishes this in a more streamlined and efficient manner. The Filecoin Expected Consensus (EC) model also employs distinctive mechanisms for block finalization that rely on Directed Acyclic Graphs (DAGs), but it implements different methodologies and concepts to achieve consensus:

Filecoin:

- Filecoin’s Expected Consensus (EC) mechanism also leverages the structure of a DAG, which gradually builds certainty over time. Consensus is probabilistic; the chance that a block will be reversed decreases with each new block that builds upon the history.

- A linear sequence of blocks (epochs) extends the chain in DAG format, and if no leader is chosen for a specific epoch, an empty block is added. Each block contributes to the overall weight of the chain, which is a measure of consensus. This weighting process happens as new blocks are added or as current blocks are endorsed. This DAG-based arrangement permits a depth of potential block linkages and pathways, reinforcing the security and finality of the ledger without being confined to a strictly linear chain.

- The process of block finalization does not require explicit, coordinated consensus events. Instead, the decentralized nature of the network allows for nodes to mine and endorse blocks based on the underlying protocol rules.

- A block is finalized when there is a statistical guarantee that the chain containing the block will not be replaced by an alternative history, meaning it has gathered enough endorsements and extensions to be considered the single source of truth.
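The weight-based, probabilistic finality described in these points can be sketched as a simple fork-choice rule. This is a deliberately simplified model, not Filecoin's weight function: here a chain is a list of tipsets (sets of block IDs, with an empty set for an epoch without a leader), weight is just the number of blocks, and `confirmations` is a hypothetical depth parameter.

```python
def chain_weight(chain):
    """Simplified weight: one unit per block across all tipsets in the chain."""
    return sum(len(tipset) for tipset in chain)

def heaviest_chain(candidates):
    """Fork choice: each node independently prefers the heaviest chain it knows."""
    return max(candidates, key=chain_weight)

def is_probably_final(chain, epoch_index, confirmations):
    """Treat an epoch as final once enough later epochs have been built on it."""
    epochs_on_top = len(chain) - 1 - epoch_index
    return epochs_on_top >= confirmations

# Usage: chain_a includes a two-block tipset and an empty (null) epoch.
chain_a = [{"b1"}, {"b2", "b3"}, set()]
chain_b = [{"b1"}, {"b4"}]
preferred = heaviest_chain([chain_a, chain_b])
```

In this model certainty is never absolute; it simply grows as more weight accumulates on top of an epoch, which matches the statistical guarantee described above.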

D.A.G.G.E.R.:

- D.A.G.G.E.R. consolidates consensus by calculating a total order of events through manipulation of a DAG, where events represent individual nodes' transactions or other information.

- All operators maintain a version of the DAG and participate in its growth by gossiping about new events, which are accepted and ordered without central coordination.

- D.A.G.G.E.R. uses implicit voting within the DAG structure, avoiding the transmission of explicit votes. The arrangement of the nodes within the graph and a set of node metadata serve as a record of these votes.

- Finalization in D.A.G.G.E.R. happens when an event (which can contain multiple transactions)—according to the graph's structure and rules—reaches a state where all nodes acknowledge it based on an algorithmic consensus without requiring synchronized agreement moments.
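A generic illustration of implicit voting on an event DAG follows. It is not the D.A.G.G.E.R. algorithm, whose internals are not described here; it assumes a hashgraph-style rule in which an operator implicitly "votes" for an event by creating a later event that descends from it, a two-thirds supermajority for finality, and a depth-then-ID rule for total order. All names are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Event:
    id: str
    creator: str
    parents: tuple = ()   # IDs of earlier events this event references

def ancestors(dag, event_id):
    """All events reachable by following parent links from event_id."""
    seen, stack = set(), list(dag[event_id].parents)
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(dag[current].parents)
    return seen

def implicit_voters(dag, event_id):
    """Creators whose own events build on event_id; no vote message is sent."""
    voters = {dag[event_id].creator}
    for event in dag.values():
        if event_id in ancestors(dag, event.id):
            voters.add(event.creator)
    return voters

def is_final(dag, event_id, all_creators):
    # Assumption: finality needs strictly more than two thirds of operators.
    return 3 * len(implicit_voters(dag, event_id)) > 2 * len(all_creators)

def total_order(dag):
    """Deterministic order every node can compute from the same DAG."""
    depth = {}
    def depth_of(event_id):
        if event_id not in depth:
            depth[event_id] = 1 + max(
                (depth_of(p) for p in dag[event_id].parents), default=0)
        return depth[event_id]
    return sorted(dag, key=lambda event_id: (depth_of(event_id), event_id))
```

The votes are never transmitted: they are read off the shape of the graph, so any two nodes holding the same DAG reach the same conclusion independently.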

Comparison:

- Both Filecoin's EC and D.A.G.G.E.R. use a DAG, but while Filecoin uses this structure to add weight and ensure probabilistic finality over time, D.A.G.G.E.R. uses the DAG itself as a ledger for transactions, where the graph's connectivity and metadata serve as the means for reaching consensus on their order and finality.

- Filecoin EC creates a decentralized and gradual building of certainty without coordinated consensus events, much like D.A.G.G.E.R. However, Filecoin relies on the extension of blocks and endorsement weight to secure the chain, whereas D.A.G.G.E.R. relies on the direct and constant construction and ordering of its DAG through node interaction and the graph's inherent properties.

- D.A.G.G.E.R. does not require the concept of epoch or block weight for finalization; it uses the entire graph's state, combined with its consensus algorithm, to determine when events (including transactions) are finalized, making the process continuous and inherent in the operation of the network.

- In Filecoin, certainty builds as nodes explicitly endorse existing blocks or add new ones, thus 'signing' them and increasing the chain's weight, whereas in D.A.G.G.E.R., the consensus process and event finalization are embedded in the creation and placement of events within the DAG.

In summary, D.A.G.G.E.R. and Filecoin both achieve decentralized consensus without the need for synchronized events, but they diverge in their core approaches. Filecoin's finalization gains certainty over time with probabilistic consensus, relying on external weights and endorsements, while D.A.G.G.E.R. finalizes transactions in a continuous, inbuilt process that uses the structure of the DAG itself. D.A.G.G.E.R.'s leaderless and bandwidth-efficient design allows for implicit voting based on the events' placement, while Filecoin's linear sequence of epochs requires nodes to explicitly mine or endorse to contribute to chain finality.

These aspects underscore how D.A.G.G.E.R. capitalizes on the DAG structure through a series of sophisticated modules and algorithms to establish decentralized consensus without the need for centralized authority or synchronized timing, and to proficiently manage network communication, sequence transactions, and mitigate potential Byzantine errors. The innovative utilization of DAG-based gossip and consensus paves the way for leaderless, asynchronous computation, thus enhancing throughput. The outcome is a resilient and effective consensus framework that is well-suited to meet the requirements of decentralized and distributed storage protocols, such as shdwDrive. Until now, no other permissionless storage protocol has successfully stood up a fully asynchronous DAG-based runtime consensus mechanism that scales throughput in direct proportion to the increasing number of node participants. D.A.G.G.E.R. Testnet Phase 1 marks a significant milestone for Web3 in the testing and benchmarking of such a modernized approach to decentralized storage systems.

Erasure Coding: Tuning for Maximum Efficiency

Data protection and durability are central concerns in any storage protocol. Understanding how erasure coding is used in decentralized storage offers insights into the measures taken to safeguard data against loss and corruption. This section explores the concept and application of erasure coding in the D.A.G.G.E.R. protocol relative to Filecoin's approach, with a specific focus on the scalability benefits introduced by D.A.G.G.E.R.'s hybrid recursive scheme for metadata management.

What is Erasure Coding?

Erasure coding is a method of data protection that divides files into multiple, redundant fragments, or shards, enabling the reconstruction of original data even if some fragments are lost or corrupted. This technique is essential for security, as it enhances data durability and availability across dispersed storage networks.
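The simplest possible erasure code makes the idea tangible: split data into k shards and add one XOR parity shard, so any single lost shard can be rebuilt. This is single-parity XOR, chosen here for brevity; it is not Reed-Solomon and tolerates only one missing shard. The function names are illustrative.

```python
def split(data: bytes, k: int) -> list[bytes]:
    """Split data into k equal-size shards, zero-padding the tail."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    return [padded[i * size:(i + 1) * size] for i in range(k)]

def xor_all(shards: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length shards."""
    out = bytearray(len(shards[0]))
    for shard in shards:
        for i, byte in enumerate(shard):
            out[i] ^= byte
    return bytes(out)

def encode(data: bytes, k: int) -> list[bytes]:
    """Return k data shards followed by one parity shard."""
    shards = split(data, k)
    return shards + [xor_all(shards)]

def recover(shards: list) -> list[bytes]:
    """Rebuild the single shard marked as None from the surviving shards."""
    missing = shards.index(None)
    rebuilt = xor_all([s for s in shards if s is not None])
    return shards[:missing] + [rebuilt] + shards[missing + 1:]

# Usage: lose one shard, rebuild it, and reassemble the original bytes.
original = b"hello world!"
stored = encode(original, k=3)
damaged = [stored[0], None, stored[2], stored[3]]
restored = b"".join(recover(damaged)[:3])[:len(original)]
```

Schemes such as Reed-Solomon generalize this to multiple parity shards, so that several shards can be lost at once and the data is still recoverable.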

Erasure Coding in D.A.G.G.E.R:

In the D.A.G.G.E.R. protocol, erasure coding is utilized to ensure data storage is robust and secure, with a pronounced focus on system performance and scalability. There are two levels of erasure coding in D.A.G.G.E.R.: 1) Client-side erasure coding and 2) Internal Metadata Ledger erasure coding and replication.

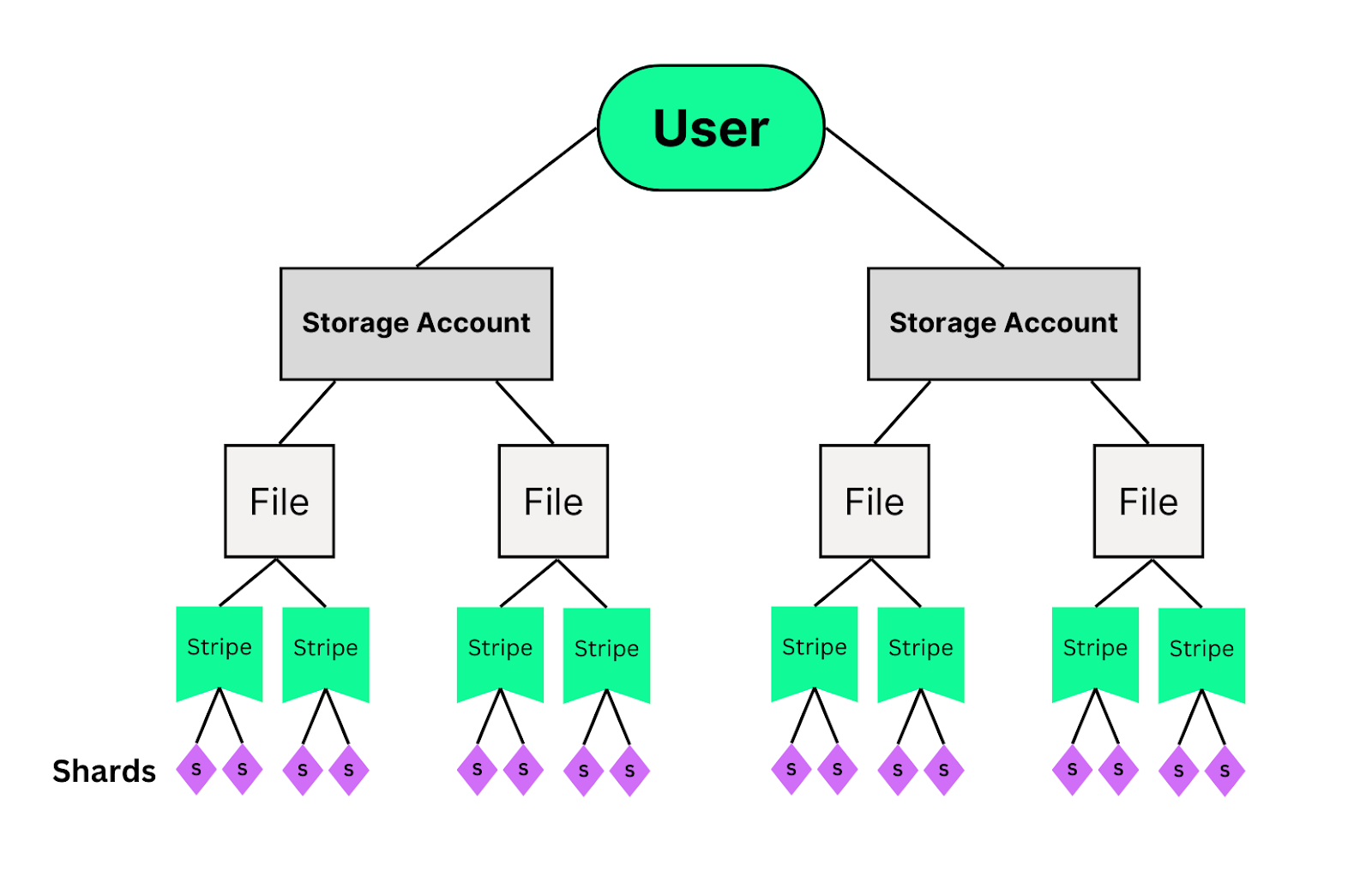

Client-side erasure coding is more straightforward as demonstrated with a visual:

A shdwDrive user can have many storage accounts, each of which has its own associated files, each of which has its own associated stripes, each of which is erasure-coded into shards (denoted with an S for brevity). The shards layer is somewhat opaque to the user; they will interact with it through the Shadow Drive client (Metadata Node) when retrieving shards and rebuilding the data. This is what we mean by “client-side” erasure coding.
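The account, file, stripe, and shard hierarchy can be sketched as nested data structures. This is a layout illustration only: the class and field names are hypothetical rather than the shdwDrive client's types, and the parity shards that real erasure coding would add to each stripe are omitted to keep the focus on the nesting.

```python
from dataclasses import dataclass, field

@dataclass
class Stripe:
    index: int
    shards: list[bytes]          # data shards only; parity omitted in this sketch

@dataclass
class File:
    name: str
    stripes: list[Stripe]

@dataclass
class StorageAccount:
    name: str
    files: list[File] = field(default_factory=list)

def build_file(name: str, data: bytes, stripe_size: int, shards_per_stripe: int) -> File:
    """Cut a file into stripes, then cut each stripe into shards."""
    stripes = []
    for index, start in enumerate(range(0, len(data), stripe_size)):
        chunk = data[start:start + stripe_size]
        shard_size = -(-len(chunk) // shards_per_stripe)  # ceiling division
        shards = [chunk[i:i + shard_size] for i in range(0, len(chunk), shard_size)]
        stripes.append(Stripe(index, shards))
    return File(name, stripes)

def rebuild(file: File) -> bytes:
    """What a client does on download: fetch shards and join them back in order."""
    return b"".join(b"".join(stripe.shards) for stripe in file.stripes)

# Usage: one account holding one ten-byte file.
account = StorageAccount("photos")
account.files.append(build_file("cat.bin", b"0123456789", stripe_size=4, shards_per_stripe=2))
```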

However, an additional type of this erasure coding also happens internally within the D.A.G.G.E.R. consensus runtime and its job is to help securely distribute the metadata ledger across the network. Metadata (the information we encode into the ledger about the state of the network) is a crucial component to manage within the network to the extent that too much metadata information can lead to bloat and slowness. D.A.G.G.E.R. breaks ground on an advanced method of improving how metadata is handled and scaled, therefore enabling a more information-rich framework native to the system’s consensus runtime that improves many facets of operations.

Shard Database Erasure Coding:

- Shards client-side data into multiple pieces using an advanced Reed-Solomon erasure coding scheme, requiring lower computational complexity (O(N log N)) for encoding and decoding.

- Designed to be more efficient and scalable compared to traditional erasure coding implementations.

Metadata Ledger Erasure Coding:

Network Metadata Tracking:

- Monitors active network operators, delineating their level of participation within the D.A.G.G.E.R. hierarchy.

- Assigns a unique index to each operator based on their admission sequence, reflecting their storage capacity, both allocated and utilized.

- Manages operator contact information via an ed25519 public key and socket address, facilitating secure and reliable communication.

State of Current Election:

- In permissionless settings, details the ongoing processes for offline node detection and eviction through an actively managed election system.

- Allows any network participant to initiate an election to potentially remove an unresponsive or malfunctioning node, preserving network integrity.
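A minimal sketch of how such an eviction election could be tallied is shown below. The two-thirds threshold, the exclusion of the target from voting, and the function name are assumptions for illustration; the actual election rules are not specified here.

```python
def tally_eviction(operators: set, target: str, votes: dict) -> bool:
    """Return True if enough operators report the target as unresponsive.

    votes maps operator name -> True (target looks offline) or False.
    """
    eligible = operators - {target}          # the accused node does not vote
    yes = sum(1 for voter, offline in votes.items()
              if voter in eligible and offline)
    # Assumption: eviction needs strictly more than two thirds of eligible voters.
    return 3 * yes > 2 * len(eligible)
```

Integer arithmetic is used for the threshold so the outcome does not depend on floating-point rounding at the boundary.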

Aggregate Network Status:

- Compiles comprehensive statistics such as the total reserved bytes, number of storage accounts created, and the total files uploaded within the network.

- Keeps a historical count of data loss indicating files and storage bytes irrecoverably lost, which is crucial for network health assessments.

Levelhash Updating:

- Regularly updates the levelhash derived from the D.A.G.G.E.R. protocol, encapsulating the current state and progressive timeline of the metadata ledger.

Storage Account Metadata:

- Catalogs essential data for each storage account, including names, ownership keys, storage quotas, files association, and immutability status.

- Enables swift retrieval of account-specific information for both administrative and operational purposes.

File Metadata Maintenance:

- Manages comprehensive records for each file, including identifiers, ownership linkage, data integrity hashes, and file size parameters.

- Guarantees quick access to file details, facilitating integrity checks and ownership verification.

Shard Metadata Indexing:

- Logs metadata for each data stripe, meticulously recording size, hash values, file associations, and other shard-specific information.

- Ensures the organized and efficient management of shards, which is fundamental for the system's erasure coding and data recovery processes.

QUIC Transport Protocol:

- Leverages QUIC for its proficient multiplexing capabilities, allowing several messages to be interchanged concurrently over the same connection without incurring additional connection overheads.

- QUIC’s low-latency handshakes enhance the speed with which connections are established, significantly reducing communication delays and improving the protocol's overall responsiveness, further improving internal erasure coding and metadata ledger replication speeds.

D.A.G.G.E.R.’s Custom Hybrid Recursive Scheme for Metadata Scalability:

- An entirely custom algorithmic augmentation supporting metadata ledger replication

- Addresses the challenge of metadata bloat in distributed ledger technologies, which is especially prevalent when managing numerous small files.

- Instead of replicating the entire ledger's metadata across all nodes, the hybrid recursive scheme stores metadata as a recursively encoded file within the network. This process continues until the metadata's size is manageable, allowing for full replication without overwhelming the network.

- The parameters of the scheme, such as stripe size (S), Reed-Solomon scheme depth (D:P), and replication threshold (R), are adjustable, providing a balance between replication overhead and retrieval latency.

- This method ensures that the overhead associated with large metadata sets is minimized, maintaining the integrity and accessibility required for a robust distributed ledger system.
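The recursion in these points can be sketched as a size calculation: treat the metadata as a file, encode it into stripes and shards, and repeat on the (smaller) metadata that describes those shards until it fits under the replication threshold. This is a back-of-the-envelope model, not the GenesysGo algorithm; in particular `entry_bytes`, the assumed metadata cost per shard, and the function name are hypothetical.

```python
import math

def recursive_levels(metadata_bytes, stripe_size, data_shards, parity_shards,
                     replication_threshold, entry_bytes=64):
    """Return (levels, final_size): the encoding levels needed before the
    remaining metadata is small enough to replicate in full on every node."""
    levels = []
    size = metadata_bytes
    while size > replication_threshold:
        stripes = math.ceil(size / stripe_size)               # S: stripe size
        shards = stripes * (data_shards + parity_shards)      # D:P scheme
        next_size = shards * entry_bytes                      # metadata about the shards
        if next_size >= size:
            raise ValueError("parameters do not shrink the metadata")
        levels.append({"encoded_bytes": size, "stripes": stripes, "shards": shards})
        size = next_size
    return levels, size                                       # size <= R here

# Usage: 1 MB of metadata, 4 KiB stripes, a 4:2 scheme, 10 KB threshold.
levels, replicated = recursive_levels(1_000_000, 4096, 4, 2, 10_000)
```

With these illustrative numbers, two rounds of encoding reduce 1,000,000 bytes of metadata to 8,832 bytes that every node can hold. Larger stripes mean fewer levels but coarser retrieval, which is the overhead-versus-latency balance the adjustable parameters are meant to tune.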

Replication, not Erasure Coding in Filecoin:

Filecoin's primary redundancy mechanism is not erasure coding but replication, as seen in its PoRep and PoSt systems. However:

- Clients have the option to erasure-code their data before making storage deals on Filecoin, distributing the coded shards across different miners for an additional layer of redundancy that functions at the application level rather than being a core protocol feature.

- Once a storage deal is in place with storage miners and the Data Transfer Module has been engaged, the client-side application using Filecoin can begin transferring its erasure-coded shards to miners, applying its own metadata indexing scheme for later retrieval.

Propagation of the Ledger:

- Filecoin does not use erasure coding for ledger replication, instead ensuring robustness through a process called ChainSync.

- ChainSync is vital to blockchain operation and security, synchronizing the blockchain by retrieving and validating new blocks and messages.

- When nodes join the Filecoin network, they subscribe to the /fil/blocks and /fil/msgs GossipSub topics to listen for new blocks.

- Nodes synchronize starting from the trusted checkpoint, typically the GenesisBlock, and BlockSync fetches blocks up to the current height to maintain up-to-date status with the main chain.

Filecoin utilizes a suite of networking protocols to maintain synchronization:

- GossipSub: A pub-sub protocol that propagates messages and blocks to keep nodes updated with new blocks and messages.

- BlockSync: A protocol that synchronizes specific blockchain segments, focusing on block ranges between specified heights.

- Hello Protocol: Enables nodes to exchange their chain heads when they first connect, effectively sharing sync status.

Additional protocols like Bitswap and GraphSync complement the synchronization process:

- Bitswap is used when GossipSub does not deliver blocks as expected.

- GraphSync provides an efficient method for fetching parts of the chain.

- As libp2p nodes, Filecoin nodes utilize these protocols to maintain sync and support the distributed nature of the blockchain.

- All Filecoin nodes must implement ChainSync protocols to be recognized as proper implementations of the Filecoin specification.
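The sync flow above (learn a peer's head, then fetch and validate block ranges from a trusted checkpoint) can be modeled in memory as follows. This is a toy model and does not use the libp2p or Filecoin APIs: blocks are plain dicts, `blocksync_fetch` stands in for a network request, and the parent-link check stands in for full block validation.

```python
def blocksync_fetch(peer_chain, start_height, end_height):
    """Stand-in for a BlockSync request: return blocks in an inclusive height range."""
    return peer_chain[start_height:end_height + 1]

def validate(previous, block):
    """Minimal validation: the block must extend the block we already trust."""
    return block["parent"] == previous["id"] and block["height"] == previous["height"] + 1

def sync(local_chain, peer_chain, batch=2):
    """Catch up from the local head (e.g. a trusted checkpoint) to the peer's head."""
    peer_head = len(peer_chain) - 1          # learned via a Hello-style exchange
    height = len(local_chain)
    while height <= peer_head:
        end = min(height + batch - 1, peer_head)
        for block in blocksync_fetch(peer_chain, height, end):
            if not validate(local_chain[-1], block):
                raise ValueError(f"invalid block at height {block['height']}")
            local_chain.append(block)
        height = end + 1
    return local_chain

def make_chain(length):
    """Build a small valid chain starting from a genesis block."""
    chain = [{"height": 0, "id": "b0", "parent": None}]
    for h in range(1, length):
        chain.append({"height": h, "id": f"b{h}", "parent": f"b{h - 1}"})
    return chain
```

After this catch-up phase, a node would stay current by listening for newly gossiped blocks rather than polling for ranges.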

Comparative Insights

The utilization of erasure coding and other redundancy measures highlights key differences between D.A.G.G.E.R. and Filecoin:

- D.A.G.G.E.R. incorporates erasure coding into its very architecture, optimizing for metadata replication and efficient data transactions within the QUIC protocol framework. This is a principal design to maximize network throughput.

- The innovative hybrid recursive scheme developed by GenesysGo demonstrates D.A.G.G.E.R.'s commitment to scalability and efficiency, particularly in metadata management, allowing the system to dynamically adjust to the size and demands of large datasets.

- Filecoin allows for erasure coding, but only as an optional, client-side strategy; the protocol itself focuses on data replication and periodic proofs of storage, and propagates its ledger through ChainSync rather than erasure coding.

These differing strategies show that while both protocols value data security, they apply distinctive internal approaches to achieve this goal. D.A.G.G.E.R.’s use of a more sophisticated erasure coding scheme native to its system, further enhanced by a novel hybrid recursive algorithm, is a design that seeks to overcome the bottlenecks that have plagued earlier pioneers.

Data Transfer: Online, not Off-line

At a time when data transfer rates and networks grow exponentially in capability and continually strive to break physical limitations, the very notion of physically mailing hard drives is anathema to the foundational principles of decentralized protocols and a shock to any system hoping to be future-proof. Filecoin, however, acknowledging the limitations of its network, resorts to such physical transfer for sizable datasets through “Offline Data Transfer,” a stark concession that speaks volumes regarding an issue that any decentralized storage protocol will likely face when dealing with extremely large datasets. Another issue, as described in their “Engineering Filecoin’s Economy,” is the time it takes to move massive volumes of data (petabytes) over limited bandwidth. Filecoin suggests that if you would be deceased before your data transfer had the time it needed to complete, then you should probably just mail the drives instead. They have a point.
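The bandwidth concern is easy to quantify with simple arithmetic. The sketch below estimates how long a single link needs to move a dataset; the link speeds and the `efficiency` factor are illustrative assumptions, not measurements of either network.

```python
def transfer_days(dataset_bytes: float, link_bits_per_second: float,
                  efficiency: float = 1.0) -> float:
    """Days needed to push dataset_bytes through one link at the given rate."""
    seconds = dataset_bytes * 8 / (link_bits_per_second * efficiency)
    return seconds / 86_400

PETABYTE = 10**15  # decimal petabyte, in bytes

one_gbps = transfer_days(PETABYTE, 1e9)    # a single 1 Gbps link
ten_gbps = transfer_days(PETABYTE, 10e9)   # a single 10 Gbps link
```

At a sustained 1 Gbps, one petabyte takes roughly 92.6 days over a single link, and about 9.3 days at 10 Gbps. Spreading the same upload across many nodes in parallel divides that time accordingly, which is why throughput that grows with node count matters for very large datasets.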

ShdwDrive and D.A.G.G.E.R. present an alternative paradigm, aligning with the modern expectation for seamless digital data transfer irrespective of dataset size. Built with a forward-looking architecture, shdwDrive proactively addresses the challenges of scaling to handle increasing volumes of data, focusing on a robust network framework where node proliferation corresponds to throughput enhancement. This approach signifies a progressive shift towards matching the actual throughput to the potential of digital networks, rather than being dictated by physical logistics. D.A.G.G.E.R. will continue to embody this philosophy by aspiring to create a durable and adaptive infrastructure, capable of augmenting its data processing capabilities as needed and embracing the growth of data-reliant modern technology sectors such as Artificial Intelligence.

Virtual Machine Integration

The differentiation in virtual machine strategy is another inflection point where Filecoin's choice of IPC—tethered to the tested-yet-aging Tendermint core—highlights a reliance on legacy consensus layers. This is sensible given their deep roots within legacy ecosystems such as Ethereum and Cosmos, where Filecoin adopts the developer frameworks of those chains and underlying consensus technologies that align.

ShdwDrive is not as bound by this gravity of traditional frameworks and makes a calculated choice of Solana integration. Rather than constructing a custom smart contract virtual machine atop D.A.G.G.E.R. for the development of shdwDrive, we instead capture the synergies of the Solana Virtual Machine—taking advantage of Solana's innovation with respect to the extensibility it provides. This harmonious marriage between shdwDrive's storage capabilities and SVM's modularity endows applications with a scalable ecosystem where storage and computation coalesce seamlessly. Using the SVM, shdwDrive directly plugs into Solana's ecosystem, gaining more immediate access to compatibility with its prodigious transaction processing, developers, and applications, aiding market adoption speed. The alliance envisions a seamless merger of storage and computation, dramatically streamlining the engagement for decentralized applications and leveraging Solana's inherent efficiencies to scale organically alongside demand.

Nodes

The node architectures of the two protocols differ significantly. For the purposes of this article, we will primarily focus on their operational functions rather than their tokenomics and incentive structures (which will be covered in the future). The Filecoin protocol is a blockchain-based payment and operator incentive structure that utilizes the same software that powers IPFS. For this reason, D.A.G.G.E.R. (the consensus core of shdwDrive) is more comparable to the combination of IPFS, Filecoin’s consensus, and the operations of Filecoin nodes.

Filecoin Nodes

- Nodes within the Filecoin network are categorized based on the services they provide, and a node's classification arises from its service offerings. There is a foundational level of service called chain verification, which is mandatory for any node participating in the Filecoin network. Above this, additional services define the node's role further.

Chain Verifier Node:

- The most basic form of a node, responsible strictly for verifying the blockchain by synchronizing and validating new blocks.

- This type of node forms the backbone of the network, ensuring the integrity and continuation of the blockchain.

- Although it plays no active part in the market transactions of the network, it is essential for maintaining consensus.

Client Node:

- Builds upon the Chain Verifier Node to interact with Filecoin's blockchain for applications.

- Enables applications such as exchanges or decentralized storage platforms to use Filecoin.

- Engages with the Storage and Retrieval Markets to initiate and manage storage and retrieval deals.

- Performs data transfers through the Data Transfer Module, facilitating the movement of data into and out of the Filecoin network.

Retrieval Miner Node:

- Extends the Chain Verifier Node's capabilities to participate in the Retrieval Market.

- These nodes answer requests to deliver stored data to clients, earning fees for retrieval services.

- Utilizes the Data Transfer Module to efficiently move data during retrieval operations.

Storage Miner Node:

- The most complex node type, embodying all the functions necessary for block validation, creation, and blockchain extension.

- Participates actively in the mining process through sector pledging, block creation, and proof submissions.

- Acts as a Storage Market Provider by securing deals to store client data.

- Uses the Data Transfer Module to manage the flow of data into their storage systems from clients.

D.A.G.G.E.R. Nodes

Metadata Nodes (Data Oracles):

- These nodes interact with the user frontend to process file uploads.

- They determine where erasure-coded stripes of data should be sent using deterministic RNG (Random Number Generation).

- They play an integral role in the consensus of D.A.G.G.E.R., agreeing on the Wield Nodes that should receive the files.
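The idea of deterministic placement can be sketched in a few lines. This is only an illustration of the general technique, assuming a shared seed that every Metadata Node already agrees on; the function name, the seed source, and the use of SHA-256 with Python's `random.Random` are hypothetical and are not a description of D.A.G.G.E.R.'s actual algorithm.

```python
import hashlib
import random


def place_stripes(file_id: str, num_stripes: int,
                  wield_nodes: list[str], seed: bytes) -> list[str]:
    """Pick one distinct node per stripe, reproducibly from (seed, file_id)."""
    if num_stripes > len(wield_nodes):
        raise ValueError("need at least one node per stripe")
    # Derive the RNG state from shared inputs only, so every honest
    # participant that runs this computes the same placement.
    digest = hashlib.sha256(seed + file_id.encode()).digest()
    rng = random.Random(int.from_bytes(digest, "big"))
    # Sort first so the result does not depend on local list ordering.
    return rng.sample(sorted(wield_nodes), num_stripes)


nodes = ["wield-c", "wield-a", "wield-d", "wield-b"]
print(place_stripes("photo.png", 3, nodes, seed=b"epoch-42"))
```

Because the output depends only on shared inputs, nodes can agree on a placement by comparing results rather than negotiating one.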

Wield Nodes:

- Function as on-chain storage device layers (OSDs), maintaining the ledger and user file data shards allocated by the Metadata Nodes.

- Interact with each other using a networking protocol to enhance data transmission stability.

- They generate mathematical proofs that serve as the basis for auditing storage quality and security.

Auditor Nodes:

- These nodes validate the system by participating in Proof of Data Possession (PDP), validating storage correctness in a decentralized manner.

- Mobile Auditors are being introduced to enable phone users to conduct audits via a mobile app.

- Mobile audits, due to their lightweight nature, facilitate a broad and ubiquitous verification layer, harnessing idle mobile device resources to secure the network.

- For their work in maintaining system integrity, Auditor Nodes are rewarded with $SHDW tokens from an emissions pool.
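To give a feel for how a lightweight audit can work, here is a minimal challenge-response sketch. It uses a Merkle tree spot check as a simplified stand-in: the auditor keeps only a root hash, challenges a chunk index, and checks the returned chunk and sibling path. This is an assumption made for illustration; the article does not specify the PDP construction D.A.G.G.E.R. uses, and all function names here are hypothetical.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_levels(chunks: list[bytes]) -> list[list[bytes]]:
    """All tree levels, leaves first; an odd node is paired with itself."""
    level = [h(c) for c in chunks]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2:
            level = level + [level[-1]]
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(chunks: list[bytes], index: int):
    """Storage node side: return the challenged chunk and its sibling path."""
    chunk, path = chunks[index], []
    for level in merkle_levels(chunks)[:-1]:
        if len(level) % 2:
            level = level + [level[-1]]
        sibling = index ^ 1
        path.append((level[sibling], sibling < index))
        index //= 2
    return chunk, path


def verify(root: bytes, chunk: bytes, path) -> bool:
    """Auditor side: recompute the root from the chunk and sibling hashes."""
    node = h(chunk)
    for sibling, sibling_is_left in path:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


shards = [b"shard-0", b"shard-1", b"shard-2", b"shard-3", b"shard-4"]
root = merkle_levels(shards)[-1][0]   # all the auditor needs to remember
chunk, path = prove(shards, 3)        # node answers a challenge for index 3
print(verify(root, chunk, path))
```

The auditor's work is a handful of hashes per challenge, which is the property that makes audits plausible on a phone.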

File Lifecycle: Storing & Retrieving Data

Filecoin employs a dual-marketplace model, consisting of both storage and retrieval markets, to facilitate the matching of clients who wish to store data with storage miners willing to provide storage space. This model is key to creating an economic incentive for storage and ensures that data is reliably stored over time.

Storage Marketplace Dynamics:

In the storage marketplace, clients and miners interact through a public orderbook which lists three types of orders: "bid orders" from clients who want to store data, "ask orders" from storage miners offering their storage space, and "deal orders" which represent matched bids and asks.

- Order Submission: Clients and miners submit their orders by transmitting a transaction to the Filecoin blockchain. The client's bid orders provide details such as the size of the storage request, price, and the desired time frame for storage, while the miner's ask orders specify the amount of space offered and the price.

- Order Matching: When the conditions of a bid and an ask align, the client sends the data to the miner, and both parties sign a deal order. This matched order is then registered in the orderbook.

- Settlement: After the match, storage miners are responsible for "sealing" their sectors containing the client's data, regularly generating proofs of storage, and submitting them to the blockchain to confirm that data is being correctly maintained over time.
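The submit, match, and register steps above can be sketched as a toy orderbook. This is a simplified model for illustration only: the field names, the integer price units, and the "cheapest eligible ask wins" rule are assumptions, not Filecoin's actual matching logic or on-chain data structures.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Bid:            # client wants to store data
    client: str
    size_gib: int
    max_price: int    # hypothetical price units per GiB
    duration_days: int


@dataclass(frozen=True)
class Ask:            # miner offers space
    miner: str
    free_gib: int
    price: int        # hypothetical price units per GiB


@dataclass(frozen=True)
class Deal:           # a matched bid and ask, signed by both parties
    client: str
    miner: str
    size_gib: int
    price: int
    duration_days: int


def match(bid: Bid, asks: list[Ask]) -> Optional[Deal]:
    """Return a deal with the cheapest ask that satisfies the bid, if any."""
    eligible = [a for a in asks
                if a.free_gib >= bid.size_gib and a.price <= bid.max_price]
    if not eligible:
        return None
    best = min(eligible, key=lambda a: a.price)
    return Deal(bid.client, best.miner, bid.size_gib, best.price,
                bid.duration_days)


orderbook = [Ask("miner-a", 64, 12), Ask("miner-b", 32, 9), Ask("miner-c", 8, 5)]
print(match(Bid("client-1", 16, 10, 180), orderbook))
```

Even in this toy form, every deal requires a counterparty search before any data moves, which is the step an on-demand cloud model removes.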

Retrieval Marketplace Mechanics:

The retrieval marketplace is distinct from the storage market in that it is designed to operate off-chain, preventing the blockchain from becoming a bottleneck for rapid data retrieval requests. As such, clients find retrieval miners who are serving the required data pieces and directly negotiate on pricing. The participants in this market have only a partial view of the orderbook, relying on a network of participants to gossip orders. This design allows for faster data retrieval, as the blockchain is not used to run the orderbook.

- Off-Chain Orderbook: Clients and retrieval miners engage in a network where they can discover each other and agree on the terms for retrieving data without relying on the blockchain to record these transactions.

- Payment Channels: To facilitate rapid data transfer, Filecoin supports payment channels for off-chain payments. This allows clients to receive data as soon as they submit their payment and miners to receive payment without waiting for blockchain confirmation.
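The pay-as-you-receive pattern behind payment channels can be sketched with simple accounting. This is a minimal model assuming cumulative vouchers against a deposit locked up front; signatures, expiry, and dispute handling are deliberately omitted, and the class and method names are hypothetical rather than Filecoin's actual API.

```python
from dataclasses import dataclass


@dataclass
class PaymentChannel:
    deposit: int               # funds locked on-chain when the channel opens
    latest_voucher: int = 0    # highest cumulative amount authorised so far

    def voucher(self, cumulative_amount: int) -> int:
        """Client side: authorise payment up to cumulative_amount, off-chain."""
        if cumulative_amount > self.deposit:
            raise ValueError("voucher exceeds locked deposit")
        if cumulative_amount <= self.latest_voucher:
            raise ValueError("vouchers must strictly increase")
        self.latest_voucher = cumulative_amount
        return cumulative_amount

    def settle(self) -> tuple[int, int]:
        """Single on-chain settlement: (paid to miner, refunded to client)."""
        return self.latest_voucher, self.deposit - self.latest_voucher


def retrieve(chunks: list[bytes], price_per_chunk: int,
             channel: PaymentChannel) -> bytes:
    """Exchange one voucher per chunk; no chain interaction inside the loop."""
    received = []
    for count, chunk in enumerate(chunks, start=1):
        channel.voucher(count * price_per_chunk)  # pay first, off-chain
        received.append(chunk)                    # miner releases the chunk
    return b"".join(received)


channel = PaymentChannel(deposit=100)
data = retrieve([b"ab", b"cd", b"ef"], price_per_chunk=10, channel=channel)
print(data, channel.settle())
```

Only opening and settling the channel touch the blockchain in this model, so per-chunk latency is bounded by the network rather than by block time.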

The storage marketplace in Filecoin is software-driven, with an automated order-matching process that does not solely rely on human-to-human interactions. The incorporation of an orderbook and smart contracts streamlines deal-making with an engine that matches orders based on predefined criteria. This system automates much of the negotiation and deal selection process, with the blockchain serving to validate and register the agreed-upon contracts.

In contrast, the retrieval market is primarily facilitated by off-chain interactions, emphasizing speed and efficiency. For retrieval, the marketplace mechanics do not involve the Filecoin blockchain for orderbook management, allowing for swifter file retrieval without the latencies inherent to Filecoin’s on-chain transactions.

D.A.G.G.E.R. / shdwDrive:

ShdwDrive, facilitated by D.A.G.G.E.R., employs a user-friendly cloud-like model for storing and retrieving data that stands in contrast to Filecoin's dual-marketplace model. D.A.G.G.E.R. seeks a more automated and immediate data management experience akin to cloud interfaces, Dropbox experiences, and Google Drive-style applications. This objective of a CDN-level streamlined user experience differs markedly from Filecoin’s approach.

Storing a File on shdwDrive v2:

The D.A.G.G.E.R. Hammer application emulates a few of the upcoming shdwDrive v2 features, namely the Dropbox-style file uploader. ShdwDrive v2 is part of the D.A.G.G.E.R. roadmap, which can be reviewed here. We chose to highlight a Dropbox-style file uploader because it represents the pinnacle of throughput capabilities while showcasing a gratifying user experience. Of absolute importance is the fact that the D.A.G.G.E.R. Hammer and the file uploader are directly connected to the Testnet Phase 1 independent operator network. There is no data-caching middle layer or other hidden feature improving speed. Anyone can visit this site and test sending transactions and uploading files to experience firsthand D.A.G.G.E.R. executing its asynchronous operational runtime in real time. This represents our commitment to a true Web3 cloud-like experience for storage that follows these key themes:

- Immediate Data Access: One of the distinguishing features of shdwDrive v2 is the rapid access to files. Users can upload files and gain access to them within seconds, bypassing the complex order-matching and deal-making steps typical of Filecoin’s storage marketplaces.

- Cloud-Like User Experience: shdwDrive aims to provide a seamless experience that matches that of a CDN. Its architecture is designed to handle large volumes of data at high throughput, capable of emulating a Web3 version of Google Drive.

- Payment Integration: Transactions within shdwDrive are executed using Solana blockchain-based payments, integrating seamlessly into the user experience without interrupting the storage process and providing an incredibly fast and non-disruptive settlement time for storage service payments.

Retrieving a File on shdwDrive v2:

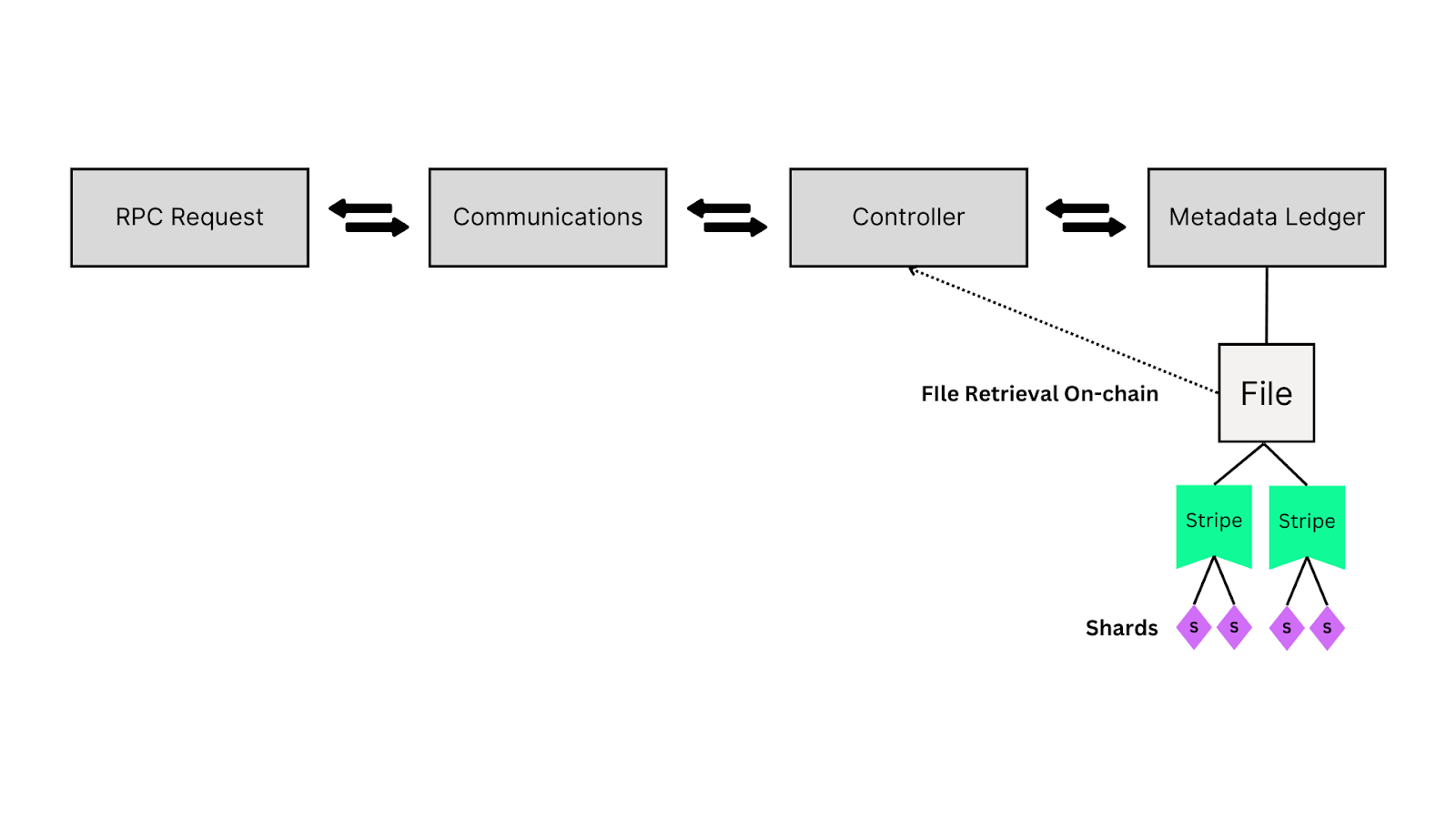

Retrievals in Shadow Drive are handled via RPC requests, representing on-chain transactions within the D.A.G.G.E.R. consensus framework. This is in direct contrast to Filecoin’s off-chain retrieval marketplace. The following is how D.A.G.G.E.R. and shdwDrive provide a solution for keeping data retrieval on-chain:

- Communications and Control Modules: RPC requests are initially sent to the communications module and subsequently forwarded to the controller module. This controller is responsible for reading the metadata ledger to obtain file location information and details regarding shard distribution necessary for file reconstruction.

- Event-Driven Consensus: File retrieval requests are not merely transactions but are treated as events published to the D.A.G.G.E.R. consensus runtime. This ensures that all actions taken within the system are part of an audited, secure, on-chain flow.

- Audited Retrieval: The architecture ensures that files are not just retrieved but also subjected to a prior audit before they are delivered. This adds a layer of security, making sure that every retrieval is verified within the blockchain's immutable framework.
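The flow described above (look up metadata, fetch shards, audit them, then reconstruct) can be sketched as follows. This is a toy model under stated assumptions: plain dictionaries stand in for the metadata ledger and the Wield nodes, and a single XOR parity shard stands in for a real erasure code. None of these names or structures come from the D.A.G.G.E.R. codebase.

```python
import hashlib


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def store(file_id: str, data: bytes, ledger: dict, nodes: dict) -> None:
    """Split data into two halves plus one XOR parity shard and record them."""
    half = (len(data) + 1) // 2
    a, b = data[:half], data[half:].ljust(half, b"\0")
    entries = []
    for i, shard in enumerate([a, b, xor(a, b)]):
        node = f"wield-{i}"                       # hypothetical node names
        nodes.setdefault(node, {})[(file_id, i)] = shard
        entries.append((node, hashlib.sha256(shard).hexdigest()))
    ledger[file_id] = {"size": len(data), "shards": entries}


def retrieve(file_id: str, ledger: dict, nodes: dict) -> bytes:
    """Controller: read the ledger, fetch and audit shards, rebuild the file."""
    meta = ledger[file_id]
    good = {}
    for i, (node, digest) in enumerate(meta["shards"]):
        shard = nodes.get(node, {}).get((file_id, i))
        # Audit before delivery: only shards matching the ledger hash count.
        if shard is not None and hashlib.sha256(shard).hexdigest() == digest:
            good[i] = shard
    if len(good) < 2:
        raise RuntimeError("not enough valid shards to reconstruct")
    a = good[0] if 0 in good else xor(good[1], good[2])
    b = good[1] if 1 in good else xor(good[0], good[2])
    return (a + b)[: meta["size"]]


ledger, nodes = {}, {}
store("report.pdf", b"hello decentralized storage", ledger, nodes)
del nodes["wield-0"]                              # simulate a node going offline
print(retrieve("report.pdf", ledger, nodes))
```

In this sketch a shard that fails its hash check is treated exactly like a missing shard, so a corrupted node cannot poison the reconstructed file.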

Visualizing on-chain file retrieval and the interactions with the client-side erasure coding scheme via D.A.G.G.E.R. modules:

In summary, Shadow Drive, powered by D.A.G.G.E.R., distinguishes itself from Filecoin by providing a much more direct and immediate user experience for both storing and retrieving data while keeping file retrieval on-chain. It avoids the complexities of a marketplace model, focusing instead on creating a robust, high-performing, and user-friendly cloud-like experience that aligns with the ideals of Web3 infrastructure. The system's retrieval process aligns with the storage's straightforward nature by leveraging on-chain transactions for real-time auditing and event-driven consensus, ensuring a secure and transparent data flow.

What emerges from this comparison is clarity on design philosophies: D.A.G.G.E.R. champions the ethos of instant data availability, while Filecoin hinges on the mechanics of a marketplace with retrieval being off-chain. As we move further into an era where data is the lifeblood of technology, the choice between these architectures will not be one of preference but of necessity, for the digital world demands nothing short of instantaneity, a domain where shdwDrive and D.A.G.G.E.R. stand poised to deliver through forethought and principled design. For Filecoin, there will be third-party adapters, pinning services, and gateways run by projects that help it out along the way.

The decision of which system to adopt hinges upon the application's needs and the user's appetite for engagement. For those who yearn for the immediacy and no-fuss dependability akin to traditional cloud services, the architectural ethos of shdwDrive and D.A.G.G.E.R. offers a compelling and pioneering frontier.

Throughput

Filecoin Performance Metrics:

- Transaction Speed: ~30-second block time for financial transfers.

- Confirmation Time: ~1 hour for 120 blocks for high-value transfers.

- Data Storage: 5-10 minutes for a 1 MiB file from deal acceptance to on-chain appearance.

- Sector Sealing: ~1.5 hours for a 32 GiB sector on minimum hardware.

- Data Retrieval: Fast retrieval method (unsealed copy of the data) can be assumed to take less than ~2 minutes; unsealing for retrieval can take ~3 hours for a 32 GiB sector on minimal hardware.

Round-Trip to Store and Retrieve a 1 MiB File:

- Best case ~5-7 minutes and 30 seconds for a 1 MiB file (using fast retrieval services)

- Worst case ~4 hours and 10 minutes for a 1 MiB file

Note: These metrics are from https://docs.filecoin.io/networks/mainnet/network-performance at the time of publishing this article.

ShdwDrive/D.A.G.G.E.R. Performance Metrics:

- Peak TPS: 50,000 transactions/second on a specified machine configuration (ideal network).

- Surge TPS: ~20,000 - 38,000 transactions/second under live Testnet Phase 1 conditions (version 0.2 - 0.3 with independent operators ranging from 20-30 node cluster size).

- Real-world TPS: ~3,000 transactions/second under real-world stress, churn, etc.

- Data Storage: 2-8 seconds for a 1 MiB file uploaded to D.A.G.G.E.R. Hammer Demo, which emulates parts of the shdwDrive v2 storage application.

- Erasure Coding Time: 0.018 ms per 1 MiB per core, effectively negligible with horizontal scaling.

- Snapshot Download: Between 10ms and 50ms for a 1 MiB file.

- Block Sync Time: Between 30ms and 300ms, depending on latency.

- Block Validation Time: Sub-500 nanoseconds to 20ms, indicating minimal delay.

- Time to Finality: 70ms to 650ms, with an average of ~273ms (on the live Testnet Phase 1 30-node global cluster which powers the D.A.G.G.E.R. Hammer Demo site).

- Data Retrieval: 1-3 seconds for a 1 MiB file to be retrieved by URL on the D.A.G.G.E.R. Hammer Demo site.

Internal Runtime to Ingest and Finalize 1 MiB (no Application, DNS, or Payment Latency):

- Best case: ~0.1 seconds

- Worst case: ~0.7 seconds

Round-Trip to Store and Retrieve 1 MiB including all non-D.A.G.G.E.R. Latencies:

- Best case: ~3 seconds for a 1 MiB file

- Worst case: ~12 seconds for a 1 MiB file

Note: Benchmarks as of D.A.G.G.E.R. version 0.2. These numbers are subject to change and evolve as we rigorously stress test the network in Testnet Phase 1. Speeds vary depending on bandwidth and proximity to a Wield node operator.
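For readers who want the two sets of round-trip figures side by side, the short calculation below converts them to seconds and takes the ratio. It uses only the numbers quoted above, with one assumption: the Filecoin best case is taken at its lower bound of 5 minutes. The `speedup` helper is just illustrative arithmetic, not a benchmark.

```python
def speedup(slow_seconds: float, fast_seconds: float) -> float:
    """How many times shorter the fast round trip is than the slow one."""
    return slow_seconds / fast_seconds


# Round-trip times for a 1 MiB file, in seconds, as stated in this article.
FILECOIN_S = {"best": 5 * 60, "worst": 4 * 3600 + 10 * 60}
SHDW_S = {"best": 3, "worst": 12}

for case in ("best", "worst"):
    ratio = speedup(FILECOIN_S[case], SHDW_S[case])
    print(f"{case} case: ~{ratio:,.0f}x shorter round trip")
```

On these stated figures, the gap is roughly two to three orders of magnitude, though both sets of numbers depend heavily on hardware, bandwidth, and network conditions.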

Comparison

The Filecoin network handles financial transactions with speeds similar to Ethereum. Data storage and retrieval times can vary significantly based on whether fast retrieval options are available and the hardware used for sealing and unsealing data, but generally speaking, they are slow. As stated, developers are expected to make use of IPFS pinning services or gateway protocols to speed up retrieval, whether by their own means or through third-party API wrappers that cache or centralize your data via web2 “hot-layers.” By every measure of the Filecoin protocol itself, it’s a slower system relative to more modern approaches. This is reinforced by the effort of Protocol Labs to create InterPlanetary Consensus (IPC) to breathe life back into the horizontal scalability of Filecoin where it matters the most: web3 applications and their need for on-demand data.

On the other hand, shdwDrive/D.A.G.G.E.R. boasts impressive transaction processing speeds and negligible times for erasure coding, data download, and node synchronization. The system is optimized for data-center-level speeds and server-grade hardware, resulting in extremely fast round-trip times for data storage and retrieval. It demonstrates high throughput and low latency, making it well-suited for environments that require rapid access to data. Anyone can test these speeds in real-time via D.A.G.G.E.R. Hammer or join the discord and see our Testnet Phase 1 Wield node operators working with GenesysGo engineers for improvements.

*Please note that since it's a Testnet, we are continually stress-testing and refining it; if the Testnet is temporarily unavailable, it's likely due to the deployment of an upgraded version of D.A.G.G.E.R., which will necessitate a cluster reboot.

Modernizing Storage for Developers and their Applications

The introduction of IPC (InterPlanetary Consensus) by Protocol Labs (the makers of IPFS and Filecoin) is an effort to position Filecoin as a more viable platform for scaling applications, specifically those building on top of the Filecoin Virtual Machine (FVM) and the Filecoin Ethereum Virtual Machine (FEVM). Despite its innovative intent, the need for IPC highlights the fundamental limitations of Filecoin when it comes to delivering an adequate data platform for application scalability. IPC's approach to scaling involves the implementation of recursive subnets and the integration of the Tendermint consensus core, an engine that dates back nearly a decade.

An Ideal Match

D.A.G.G.E.R. and shdwDrive, in conjunction with the high performance of the Solana blockchain, reflect a forward-thinking approach to the entire end-to-end web3 landscape. Here, a novel and powerful consensus engine, D.A.G.G.E.R., pairs perfectly with the cutting-edge technology stack of the Solana ecosystem to offer a seamless, capable, modern solution for Solana’s data needs. The Solana Virtual Machine (SVM) and Solana Payment Processing components work in harmony with D.A.G.G.E.R. to ensure incredibly fast operations and a streamlined development experience.

This stack is devoid of the complexities and inherent slowness observed in the IPC strategy:

- Speed and Performance: D.A.G.G.E.R. + Solana boasts unrivaled processing speeds with low-latency operations.

- Simplified Consensus Mechanism: Unlike the IPFS + Filecoin + IPC stack, which necessitates multiple, older consensus engines working in sequence, D.A.G.G.E.R. relies on a singular, state-of-the-art consensus mechanism that is attuned to the modern demands of blockchain technology and can soar at Solana speeds.

- Lean and Efficient Interoperability: Scalability is not achieved through cumbersome layers of subnets or Layer 2 (sidechains) but instead through efficient design and integration with Solana’s high-capacity network and the Solana Virtual Machine.

- Developer-Friendly Ecosystem: Developers are granted a powerful yet simple environment optimizing for performance and ease of use, unburdened by multi-chain interoperability concerns that can overcomplicate application deployment and operation.

Thus, the match between D.A.G.G.E.R. and Solana signals not just a technological kinship but a shared vision for the future of scalable, decentralized, data-rich applications. It merges the swiftness and precision of modern consensus methods with the raw power of Solana’s blockchain, forming a stack that is as performant as it is elegant.

Conclusion

As we ring the bell on this friendly tussle between D.A.G.G.E.R. and Filecoin, there are key takeaways we want to underscore—elements that are not only pivotal but set a new bar for what decentralized storage can offer.

First and foremost, D.A.G.G.E.R. embodies an advanced, forward-thinking design—a paradigm tailored for efficiency and streamlined for exceptional performance. This framework democratizes cloud revenues, rewarding node operators and mobile Auditors alike. By innovatively decoupling transaction ordering from execution within the consensus runtime, the architecture achieves remarkable efficiency. It facilitates real-time data storage and retrieval, reminiscent of traditional cloud offerings yet enriched with the compelling attributes of decentralization.

Harnessing the capabilities of the Solana blockchain, shdwDrive captures the forward momentum of a blossoming ecosystem and positions itself harmoniously with the demanding tempo of modern-day solutions. The result is a decentralized storage protocol that epitomizes agility, providing top-notch performance on which developers and enterprise clients can confidently depend. Our vision is to offer an unparalleled service that not only solidifies trust but also intuitively aligns with user expectations—a platform where robustness is embedded within its core and user-centric design prevails.

With tremendous respect for the strides made by Filecoin and the Protocol Labs team, we celebrate the contrasts with D.A.G.G.E.R. and shdwDrive. While we stand on the shoulders of giants, appreciative of the groundwork laid before us, we also look to the horizon with a vision to innovate and refine the decentralized storage experience, ensuring it is fit for the rapidly evolving demands of tomorrow’s digital ecosystem.

As we conclude another friendly showdown, it is clear we continue to bolster our toe-to-toe might with concrete data, a live and evolving testnet that is accessible to the public, and an appetite for more time in the arena. Thanks for reading, and keep an eye on Twitter for when we announce our next matchup in the D.A.G.G.E.R. Versus series.